I’ve written about ubiquitous AI mandates in a previous issue of the Picnic. And you can read anywhere you like about the extent to which the nature of LLMs causes AI agents to drop the ball on anything from the simplest tasks and demos to complex operations in prod (Pivot to AI and Futurism are chock full of examples).

Add those two facts together, and what you get is that people are being forced to use a technology that consistently gives wrong answers.

The only way to reconcile that is to drop the quality bar for outputs. But some executives think they’ve found a loophole: put the responsibility for correcting the LLM’s mistakes on the people who are mandated to use that LLM. This is being called “human in the loop” to reassure customers (who also know that AI constantly lies) that the final call is still being made by a qualified professional.

In a way, this is a manifestation of the form factor trap: other people say this pattern worked for them, so it must surely work for us.

But it doesn’t work for those other people either. It doesn’t really work for anyone. It’s a bad pattern for automation in general and, separately, a bad pattern for LLMs specifically.

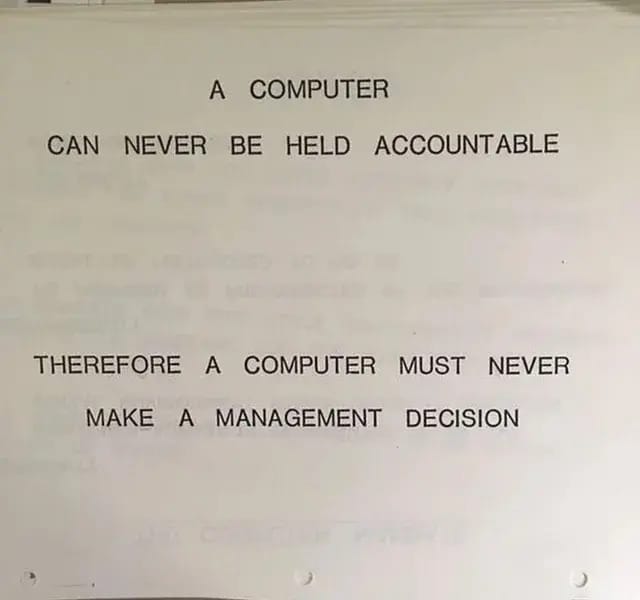

From the famous 1979 IBM presentation

“QA by inspection is a mug’s game”

Automation didn’t emerge onto the scene in Q4 2022. ELIZA (and the associated effect of humans projecting intelligence onto an extremely primitive chatbot) dates from 1966. Computer scientists had known that dropping quality was the easiest way to juice velocity for so long that it was in a textbook by the 1970s.

One of the big problems is the tendency for the machine to dominate the human... consequently an experienced integrated circuit designer is forced to make an unfortunate choice: Let the machine do all the work or do all the work himself. If he lets the machine do it, the machine will tell him to keep out of things, that it is doing the whole job. But when the machine ends up with five wires undone, the engineer is supposed to fix it. He does not know why the program placed what it did or why the remainder could not be handled. He must rethink the entire problem from the beginning.

By the 80s the field was already so advanced that David D. Woods could write (in a publication called The AI Magazine, no less!) a summary of existing scholarship on the practical problems of industrial automation, or what he calls “joint human-machine cognitive systems.” Ruth Malan provides some more illustrative excerpts from other books written around the same time.

In the 40 years since Woods was writing, technology may have evolved (although ELIZA still beat ChatGPT) but we’re still using the same Mk 1 Human Brain to employ it productively. And in many ways, the AI chatbot interface paradigm represents a significant regression in human-computer interaction.

Supervision brings complacency.

Ten years ago, I worked on a human-in-the-loop chat system (this would be called “agentic” today) that was extremely specific about how the human was meant to participate: the chat program would kick a conversation over to an operator if it wasn’t 100% sure what to do next. The operator’s task was easier than that of Finegold’s circuit designer, because they just had to scroll up a few messages to see what was necessary to unblock the bot, after which it would pick up the workflow again.
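To make that handoff concrete, here is a minimal sketch of the pattern in Python. It is not the actual system: the intent table, the `Conversation` class, and both function names are hypothetical, and exact-match lookup stands in for whatever "100% sure" meant in practice.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Conversation:
    messages: list[str] = field(default_factory=list)
    waiting_on_operator: bool = False


# Hypothetical rule table: the bot only acts on inputs it recognises exactly.
KNOWN_INTENTS = {
    "track order": "Your order is on its way.",
    "opening hours": "We are open 9 to 5, Monday to Friday.",
}


def bot_step(convo: Conversation, user_message: str) -> Optional[str]:
    """Reply if the next step is certain; otherwise hand off and return None."""
    convo.messages.append(f"user: {user_message}")
    reply = KNOWN_INTENTS.get(user_message.strip().lower())
    if reply is None:
        # The machine flags its own uncertainty and stops acting.
        convo.waiting_on_operator = True
        return None
    convo.messages.append(f"bot: {reply}")
    return reply


def operator_step(convo: Conversation, decide: Callable[[list[str]], str]) -> str:
    """The operator reads the last few messages, answers, and unblocks the bot."""
    context = convo.messages[-3:]  # "scroll up a few messages"
    reply = decide(context)
    convo.messages.append(f"operator: {reply}")
    convo.waiting_on_operator = False
    return reply
```

The point of the design is that the machine decides when the human gets involved, and the human only needs a few messages of local context to act. The operator is never asked to audit everything the bot has said.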

But LLMs don’t work like that, and the ways they are productized make it harder, rather than easier, for a human operator to “collaborate” with the AI, because the AI doesn’t know when it is wrong. The operator has to be on the lookout for errors anywhere at any time, and our brains aren’t set up for that kind of inspection. Especially not when dealing with a system that is, in essence, designed to deceive.

Putting "a human in the loop" is in no way a solution to the problem that all LLMs produce incorrect outputs and cannot be prevented from doing so. Their objective is to produce "plausible text" and therefore preventing the user from noticing the false parts is the success condition.

Output that looks like an answer

There are two parts to your typical AI product: the LLM, and the chatbot interface. Each presents its own problem for our hapless human.

The reason that LLMs stayed relatively obscure until OpenAI packaged one into a chat form factor is that this form factor uses the human-in-the-loop in a specific way: rather than empowering them to do QA, what this setup does is reflect the user’s own humanity to make the chatbot appear more intelligent than it actually is. This can create a lot of problems, down to the charmingly named “AI psychosis.” And of course, guard rails against this outcome are nowhere to be found within those AI adoption mandates.

The issue with LLMs is that they give the illusion of competence. The time savings are so enormous that people just assume it's fine.

Meanwhile, the LLM component of the chat system is not producing an answer, merely text that is statistically likely to resemble an answer. Or rather, text that the user is likeliest to accept. It will do all kinds of things to achieve this, down to replicating human cognitive biases.

The “better” these models get, the more plausible their answers become — and the more cognitive load is placed on the human operator to sift fact from hallucination. It’s harder to read code than to write it; when developers are flooded with a bunch of generated code, the labor required to sift through it to find anything useful is often more than just doing it from scratch.

Diehard prompt engineers will naturally assume that anyone complaining about the low quality of LLM outputs simply didn’t prompt it right. But the actual extent of an LLM’s adherence to a prompt is extremely variable. Statistics, by definition, cannot understand what you want.

The AI system is making all the decisions for you… your specifications are wishes, not instructions.

Rather than getting better at producing right answers, LLMs have only gotten better at acting contrite after doing the wrong thing. Your AI companion may delete all your shit even after you explicitly asked it not to, but at least it now also sends a lovely apology letter.

Human + AI < Human

Another refrain you will have heard many times is “you won’t be replaced by AI, but by a human using AI.” But is that actually true? Are you sure? Because in practice, it seems that using AI is actually making people worse at their jobs and at critical thinking in general.

Something we've observed in many domains, from self-driving vehicles to scrutinizing news articles produced by AI, is that humans quickly go on autopilot when they're supposed to be overseeing an automated system, often allowing mistakes to slip past. The use of AI also appeared to hinder creativity, the researchers found, with workers using AI tools producing a "less diverse set of outcomes for the same task" compared to people relying on their own cognitive abilities.

Humans who rely on an LLM to generate outputs for them do not understand those outputs well enough to do anything with them; the loop closed when the output was generated, no need to think about it or feel any ownership over it. Meanwhile, the reliance on AI is preventing junior entrants into every field from developing the very skills they would need to check that AI’s work.

As always, the technology is not entirely to blame. But the humans at fault are not the users; rather they are the executives who think that any of this represents vision or leadership on their part.

Hazel Weakly has a great piece on what AI systems that were actually designed for a human to be in the loop might look like. But in most workplaces, the message is instead “figure it out yourself, or you’re fired.”

— Pavel at the Product Picnic